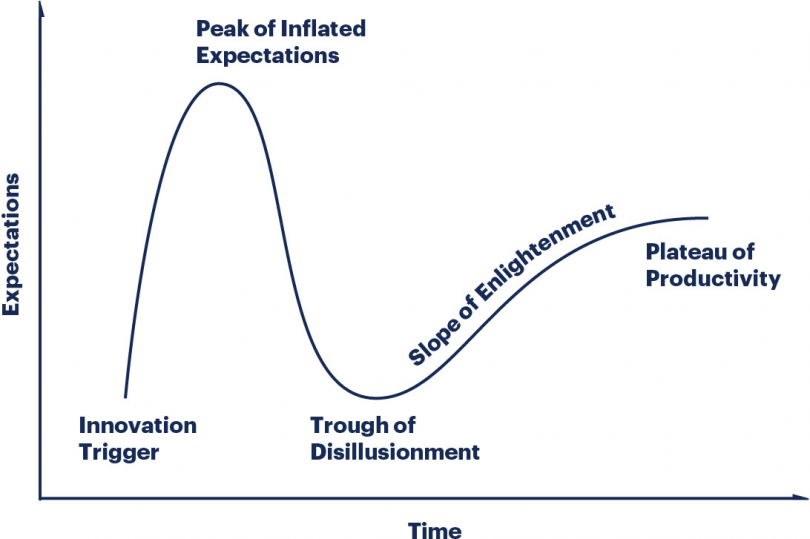

On the “People vs. Algorithms” podcast, Brian Morrissey recently suggested that we view the reaction to AI through the lens of the Gartner Hype Cycle, a concept that was new to me.

It’s a graph showing the phases people go through as they adopt, and react to, a new technology.

Phase 1 is the Technology Trigger. This is when a new technology attracts attention and generates a lot of buzz, whether positive or negative. We might imagine a connected world with the Internet of Things, new immersive experiences with virtual reality, or a decentralized digital currency with blockchain. In the case of AI, we worry about Skynet and murderous robots.

Phase 2 is the Peak of Inflated Expectations. This is where we get exaggerated, almost messianic claims about the technology, with lots of unrealistic projections and too much enthusiasm. The Segway was going to change cities. Virtual reality would let people with disabilities participate more fully in life. AI will eliminate everyone’s job.

Phase 3 is the Trough of Disillusionment, which reminds me of the Slough of Despond in The Pilgrim’s Progress. As the proverb says, “hope deferred makes the heart sick.” Once we realize the technology won’t live up to its exaggerated expectations, we feel let down. There are security or regulatory problems. People don’t like it as much as they thought they would. It doesn’t integrate well with other systems.

Phase 4 is the Slope of Enlightenment. The technology matures, and practical applications and benefits emerge. It finds more realistic and sustainable uses, and organizations begin to understand its value. People figure out new ways to use it, and it works well in particular niches.

Phase 5 is the Plateau of Productivity. At this point the technology reaches mainstream adoption and becomes part of everyday life or business operations. It’s mature and stable, with well-established practices and a wide user base.

When it comes to AI, we’ve had the technology trigger, and I think we’re still climbing toward the peak of inflated expectations. But the trough of disillusionment is coming, which means we’ll start to notice its problems:

- It lacks generalized intelligence.

- It hallucinates too much.

- It has way too much bias.

- It has very limited contextual understanding.

- It’s not nearly as creative as we had hoped.

- It doesn’t have a good grasp of basic ethics.

- It’s too much of a black box. We don’t understand how it works, so we can’t trust it.

- It gets boring.

But then we’ll start to find reasonable tasks for AI. It won’t be as scary, and we won’t expect too much of it. But it will be useful.

That, my friends, seems like the probable path for AI.

Sources

The People vs. Algorithms Podcast

Brian Morrissey on Twitter